Research

Broadly, my research interests include Machine Learning, Computer Vision and Analytic Combinatorics.

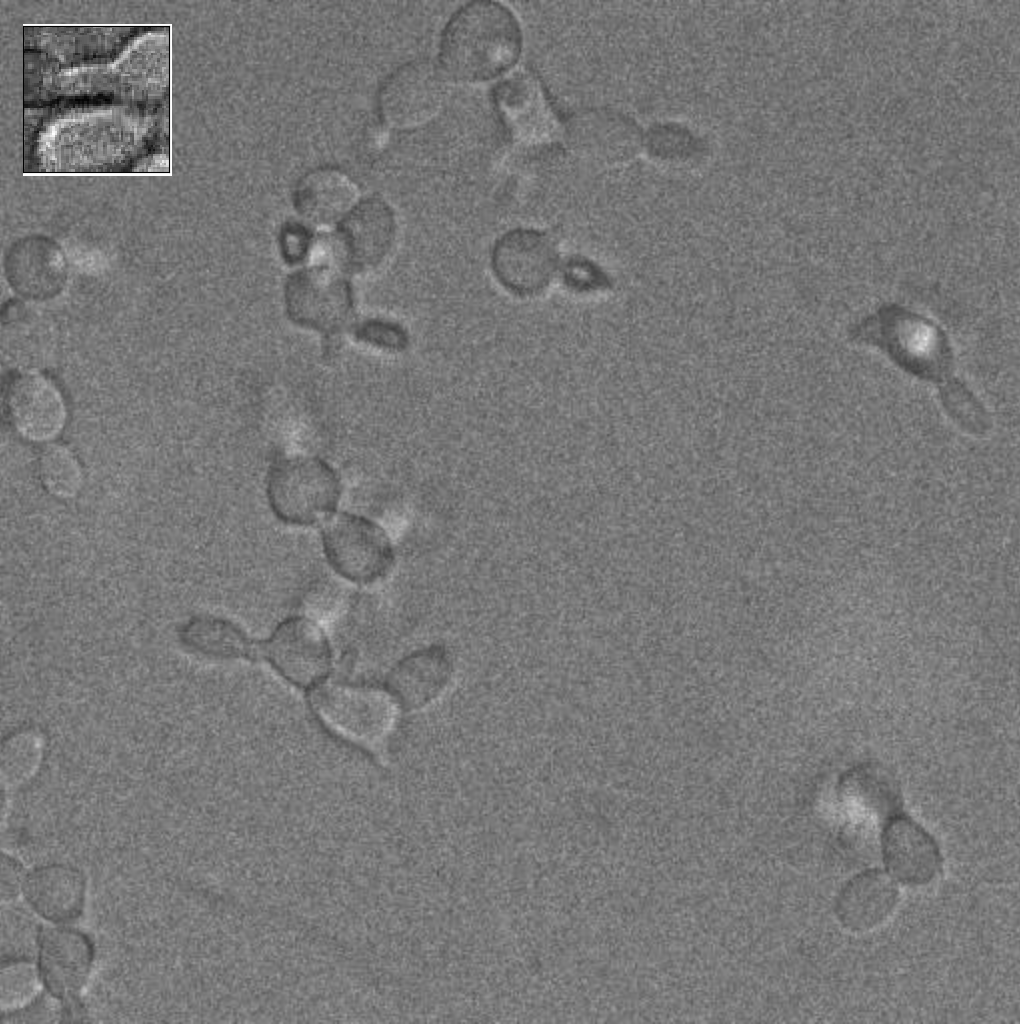

CNN Based Yeast Cell Segmentation in Multi-Modal Fluorescent Microscopy Data

This project applies state-of-the-art image segmentation techniques to high-noise cellular microscopy data. My work explores current techniques, including Conditional Random Fields, edge detection, the watershed algorithm and spectral clustering, as well as open-source pipelines such as CellProfiler.

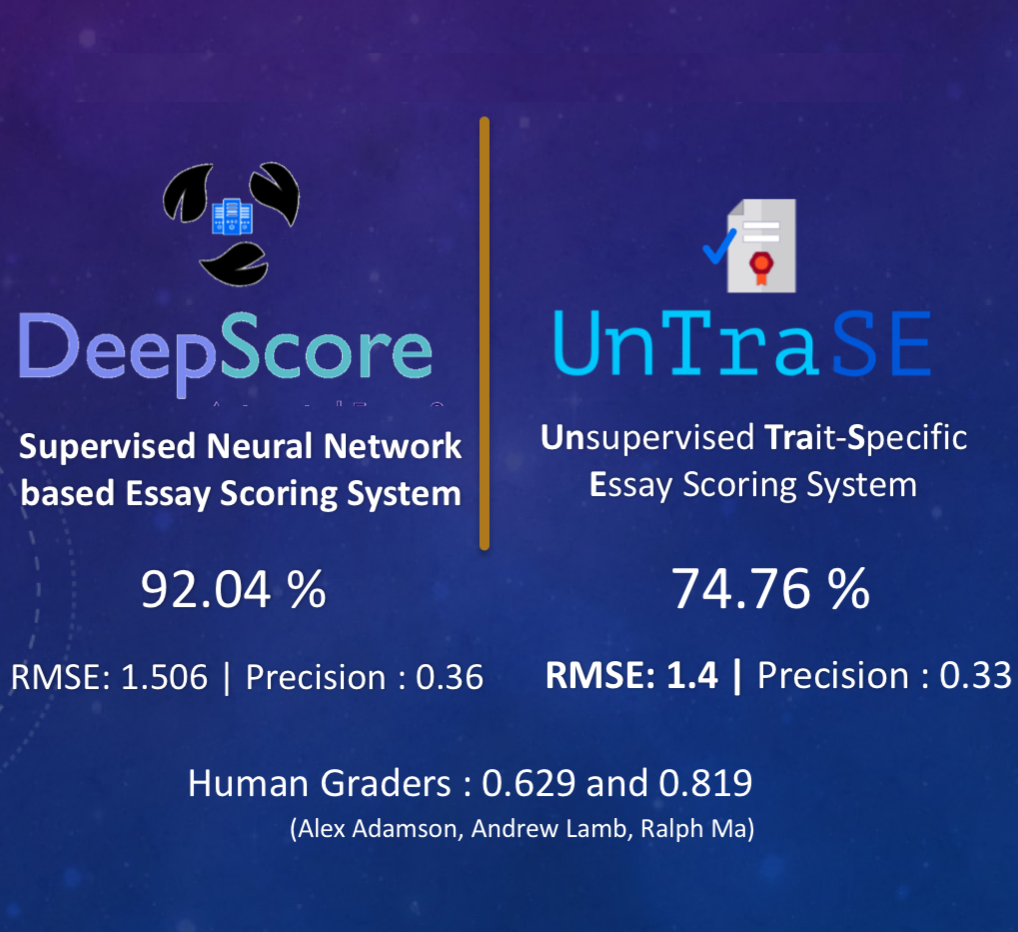

A Two-Fold Exploratory Study on AES

Existing research on essay scoring relies heavily on extracting statistical features and training on huge datasets to evaluate and score essays. This makes such systems impractical for primary school teachers to use for grading. Moreover, until recently, even systems trained on huge datasets yielded only average results. We use LSTMs and feed-forward neural networks to devise a supervised system that improves on the state-of-the-art system, and an unsupervised system that performs as well as many recent supervised systems.

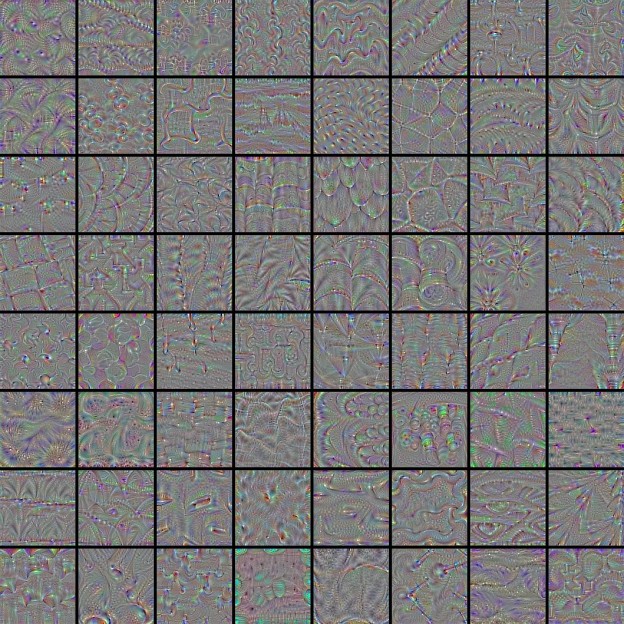

Video Thread Background Scene Classification with VGG16 Features Using Convolutional Neural Networks

This research project aimed to use deep learning networks to implement a scene classification model. I implemented a convolutional neural network pipeline in which a logistic regression classifier maps the feature vectors obtained from VGG16 to the scene categories of a famous TV show.

A Socio-economic Assessment of Climate Vulnerability

Climate change poses a real and present danger to the future of the world. Also at stake is the existence of practically every major life form on this planet. We devised a time-series model of the socio-economic factors contributing to climate change and produced several visualizations that establish relations between these factors. More generally, we created an educational interface that provides visualizations to trigger climate-aware thinking and offers useful insights through basic statistical and machine learning methods.

Mosaicing of Aerial Images - Estimating transformations from the image points

The goal of this project was to build a system that finds point correspondences between two images, warps one image to align with a reference image, and merges the two into a mosaic with a larger field of view (FOV).
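
Estimating a transformation from image points can be illustrated with a minimal sketch. It assumes exactly four point correspondences and a projective transformation (homography) whose last entry is fixed to 1, which reduces the problem to an 8x8 linear system. The function names and the sample points are hypothetical, and a real mosaicing pipeline would typically add feature matching, outlier rejection and image warping, none of which is shown here.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def estimate_homography(src, dst):
    """Estimate a 3x3 homography (h33 = 1) from four (x, y) -> (u, v) pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), same for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(h, point):
    """Map a point through the homography, dividing by the projective scale."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Hypothetical correspondences: corners of a unit square and where they land
# in the reference image.
src_points = [(0, 0), (1, 0), (1, 1), (0, 1)]
dst_points = [(10, 20), (30, 22), (32, 45), (8, 40)]
H = estimate_homography(src_points, dst_points)
```

With more than four correspondences, the same equations are usually solved in a least-squares sense instead of exactly.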

Analysis of Neural Network architectures for Supervised Classification

The goal of this research project was to analyze the performance of various types of neural network architectures including Feed-forward Neural Networks and Competitive Networks over a supervised classification problem using various metrics.

First-order Theorem Proving - Which Features Impact the Most?

The aim of this project was to identify simple feature measurements of a conjecture and its axioms that provide enough information to determine a good choice of heuristic. Our dataset consists of a set of 5 heuristics, each with results from 14 static feature measurements and 39 dynamic feature measurements. The basic units affecting the features include the set of processed clauses, the set of unprocessed clauses and the axioms.

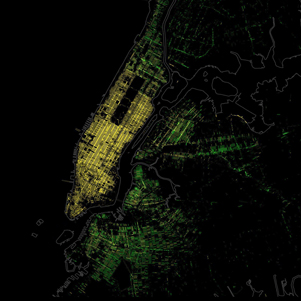

The freefinity Project: Analysis of NYC Cab Services

This project is part of a larger research effort that I am currently pursuing. Using state-of-the-art big data analytics and data mining techniques on a dataset of around 77,414 Megabytes, this project analyzes commuting patterns, neighborhoods, traffic, tipping patterns, taxi fares (and more) in urban communities. The purpose is to extract useful insights so that any solutions we derive can be mapped to other large cities.

Sentiment Analysis over Common Crawl Corpus Using Amazon Elastic MapReduce (EMR) with AWS S3

Analyzed over 2,500,000 Megabytes of data from the Common Crawl corpus using Amazon EMR with an S3 bucket. The project involved finding patterns where people express their feelings with the phrase "I feel". Words that indicated a particular sentiment were collected and grouped using Spark.
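
The "I feel" pattern search can be sketched in a few lines. This is a plain-Python illustration rather than the Spark job used in the project: the regular expression, the optional intensifier list, the function name and the sample sentences are all assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical pattern: "I feel", an optional intensifier, then the feeling word.
FEEL_PATTERN = re.compile(
    r"\bI feel\s+(?:so\s+|very\s+|really\s+)?([a-z]+)", re.IGNORECASE
)


def count_feelings(lines):
    """Count the word that follows "I feel" across an iterable of text lines."""
    counts = Counter()
    for line in lines:
        for match in FEEL_PATTERN.finditer(line):
            counts[match.group(1).lower()] += 1
    return counts


sample = [
    "I feel happy today.",
    "Sometimes I feel so tired, but I feel happy again.",
    "No match here.",
]
feelings = count_feelings(sample)
```

In a distributed setting, the per-line matching would correspond to the map step and the counting to the reduce step.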

An Experimental Comparison of Memory Bounded & A* Algorithms

The goal of this experiment was to implement path-finding algorithms and record their running times and the depths they reach in the search space. Using Manhattan Distance and NMT Heuristics, our results provide a holistic comparison of the performance of both algorithms on the famous 8-Puzzle and 15-Puzzle Problems.
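
For reference, A* with the Manhattan distance heuristic on the 8-Puzzle can be sketched as follows. This is a minimal illustration and not the code used in the experiment: the goal layout, the state encoding as a tuple with 0 for the blank, and the function names are assumptions, and the memory-bounded variant is not shown.

```python
import heapq

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # assumed goal layout; 0 marks the blank


def manhattan(state, goal=GOAL, width=3):
    """Sum of horizontal and vertical distances of each tile from its goal cell."""
    total = 0
    for index, tile in enumerate(state):
        if tile == 0:
            continue
        target = goal.index(tile)
        total += abs(index // width - target // width)
        total += abs(index % width - target % width)
    return total


def neighbors(state, width=3):
    """Yield every state reachable by sliding one tile into the blank."""
    blank = state.index(0)
    row, col = divmod(blank, width)
    for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < width and 0 <= c < width:
            swap = r * width + c
            nxt = list(state)
            nxt[blank], nxt[swap] = nxt[swap], nxt[blank]
            yield tuple(nxt)


def a_star(start, goal=GOAL):
    """Return the number of moves in a shortest solution, or None if unsolvable."""
    frontier = [(manhattan(start, goal), 0, start)]  # (f = g + h, g, state)
    best_cost = {start: 0}
    while frontier:
        _, cost, state = heapq.heappop(frontier)
        if state == goal:
            return cost
        if cost > best_cost.get(state, float("inf")):
            continue  # stale queue entry
        for nxt in neighbors(state):
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                priority = new_cost + manhattan(nxt, goal)
                heapq.heappush(frontier, (priority, new_cost, nxt))
    return None


moves = a_star((1, 2, 3, 4, 0, 6, 7, 5, 8))
```

Because the Manhattan distance never overestimates the remaining moves, the first time the goal is popped from the queue its cost is optimal.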

Classification of CKD Cases Using Multivariate K-Means Clustering

The automated detection of diseases using Machine Learning techniques has become a key research area. Although the computational complexity of analyzing a huge dataset can be extremely high, the merits of obtaining the desired result justify that complexity. In this paper we adopt the K-Means Clustering Algorithm with a single mean vector of centroids to group cases into clusters with varying likelihood of the patient being prone to CKD. The results are obtained from a real case dataset from the UCI Machine Learning Repository.
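
The clustering step can be illustrated with a minimal sketch of the standard K-Means (Lloyd's) iteration. It is not the paper's implementation: the farthest-first initialization is swapped in to keep the example deterministic, the function names are hypothetical, and the two-feature toy records below are made up for illustration and are not taken from the UCI dataset.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, k, iterations=100):
    """Cluster points with Lloyd's algorithm; returns (centroids, assignments)."""
    # Farthest-first initialization (an assumption made for determinism).
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(
            points, key=lambda p: min(squared_distance(p, c) for c in centroids)
        )
        centroids.append(farthest)

    assignments = None
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged
        assignments = new_assignments
        # Update step: each centroid becomes the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Made-up two-feature records forming two well-separated groups.
records = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (9.0, 9.0), (9.2, 8.9), (8.8, 9.1)]
centroids, labels = k_means(records, k=2)
```

On real multivariate data, features are usually scaled before clustering so that no single attribute dominates the distance.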

Publications

CNN Based Yeast Cell Segmentation in Multi-Modal Fluorescent Microscopy Data

On Proving A Special Decomposition of Numbers Using Complex Variable Theory

A Simplified Analysis To A Generalized Restricted Partitions Problem

Classification of CKD Cases Using Multivariate K-Means Clustering

Software & Open Source Contributions

Education & Experience

Stony Brook University

Birla Institute of Applied Sciences

- First Division with Honors; stood first in the class.

- Several co-curricular awards. Details can be found here.

I attended high school at Delhi Public School and graduated with a Scholar Badge for academic excellence for seven consecutive years.

Tag Cloud

I believe a tag cloud provides a great description.

Nomura Securities, New York City

Coursework

At Stony Brook University, I have taken CSE-527 (Computer Vision) by Prof. Minh Hoai Nguyen, CSE-564 (Visualization & Visual Analytics) by Prof. Klaus Mueller, CSE-628 (Natural Language Processing), CSE-537 (Artificial Intelligence) by Prof. Niranjan Balasubramanian, CSE-545 (Big Data Analytics) by Prof. Andrew Schwartz, CSE-537 (Fundamentals of Computer Networks) by Prof. Aruna Balasubramanian and CSE-548 (Analysis of Algorithms) by Prof. Rezaul Chowdhury.

Here is a list of courses I took at Birla Institute of Applied Sciences. I took Soft Computing as my elective course.

Online / Independent Coursework and Certifications

I try my best to learn outside the classroom as well. I really like taking MOOCs from time to time. They allow us to explore different areas without academic constraints.

Discrete Inference and Learning in Artificial Vision

Miscellaneous

- I secured an All India Rank of 176 in the Joint Entrance Screening Test, 2016.

- I was selected for the prestigious IIT-Bombay (Indian Institute of Technology - Mumbai) Research Fellowship Awards, 2015 under Dr. Niranjan Balachandran (Ph.D., Ohio State University & Harry Bateman Research Instructor at the California Institute of Technology).

- I was offered admission to top Masters programs across the United States by several universities, including New York University, Vanderbilt University, the University of Florida, Michigan Technological University, Arizona State University, Northeastern University, the University of Texas at Dallas and the University of Illinois at Chicago. I chose to attend Stony Brook University.

- I was awarded the prestigious top 0.1% merit certificate in Information Technology by the Central Board of Secondary Education.

Art

I love art. Sometimes I wish I had gone to an Art School instead of a Math School. But that is fine. Maybe I'll go to one in the future. For now, I paint whenever I feel like it.

- Vétiver - Something I came up with recently.

- Artinate - Really old, primary school stuff. I have tried to scan and upload my paintings and sketches to my WordPress blog.

- I won the best painting award in the Annual Painting Competition organized by Bhartiya Art Education Society.

- I won over 50 certificates and medals in various Art, Craft and Painting competitions during my schooling at Delhi Public School.

For The Love of Literature

I like to write and read. Check out my blogs:

- Castles In The Sea - I blog about travel, literature, art, and sometimes some random stuff.

- I read Fiction (except for Sci-Fi, which is better left to movies and TV) and Non-Fiction. My personal favorites are "10% Happier" by Dan Harris, "Eat Pray Love" by Elizabeth Gilbert, "A Passage to India" by E. M. Forster, "Life is What You Make It" by Preeti Shenoy, "The Alchemist" by Paulo Coelho and "The Goldfinch" by Donna Tartt. I have to stop; the list goes on.

Get In Touch